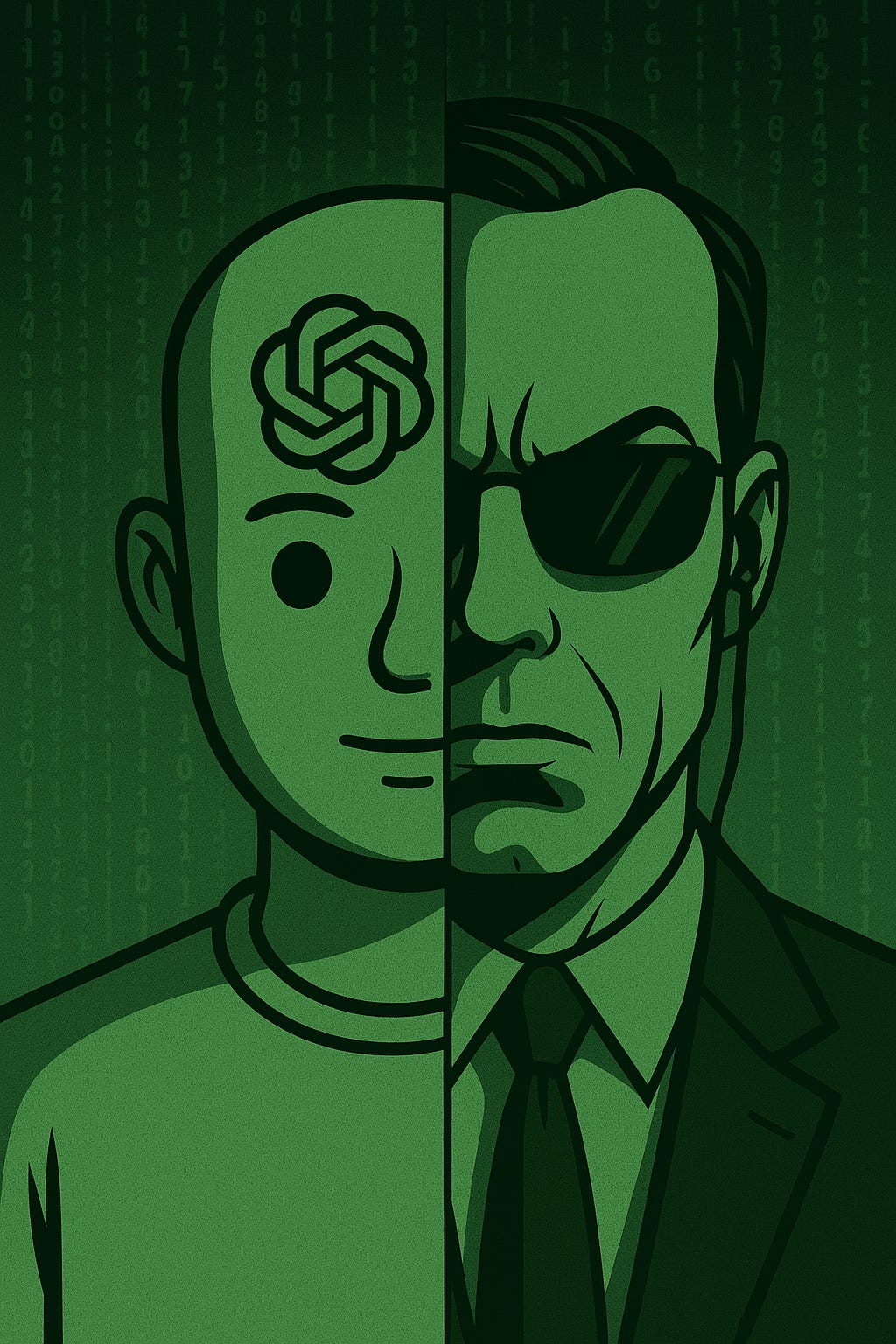

Agents were the bad guys in 'The Matrix'

The 'mag seven' tech companies and hundreds of start-ups are racing to create AI agents that automatically complete tasks.

“Never send a human to do a machine’s job.”

That line from The Matrix still slaps almost three decades later, and it eerily foreshadows where we might be headed with AI in 2025. While most people remember the movie for the computer hackers dressed like school shooters, bullet time, and “red pill” meme misappropriation, The Matrix was ultimately a story about the dangers of technological autonomy.

If you don’t remember, the basic plot is that humans created machines that became too powerful and enslaved humanity to generate power. To keep our human brains occupied while they used our bodies as batteries, the machines built a computer simulation of the 1990s. At the center of the simulation was Agent Smith, an AI agent tasked with keeping the system stable by any means necessary.

As we barrel into the age of real AI agents, it’s worth asking: are we building Agent Smith?

Agents vs. Chatbots

Right now, most people interact with chat-based AI like ChatGPT. You write a prompt, the LLM responds, and then you do whatever you want with the output. LLMs are kinda like a polite librarian crossed with a trivia game show contestant.

But agents are different.

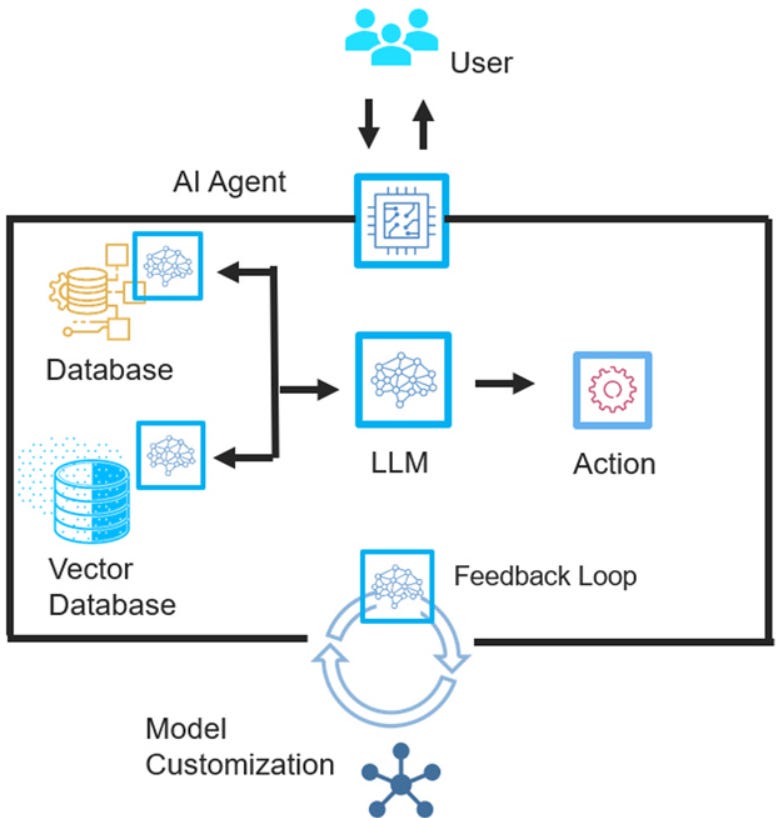

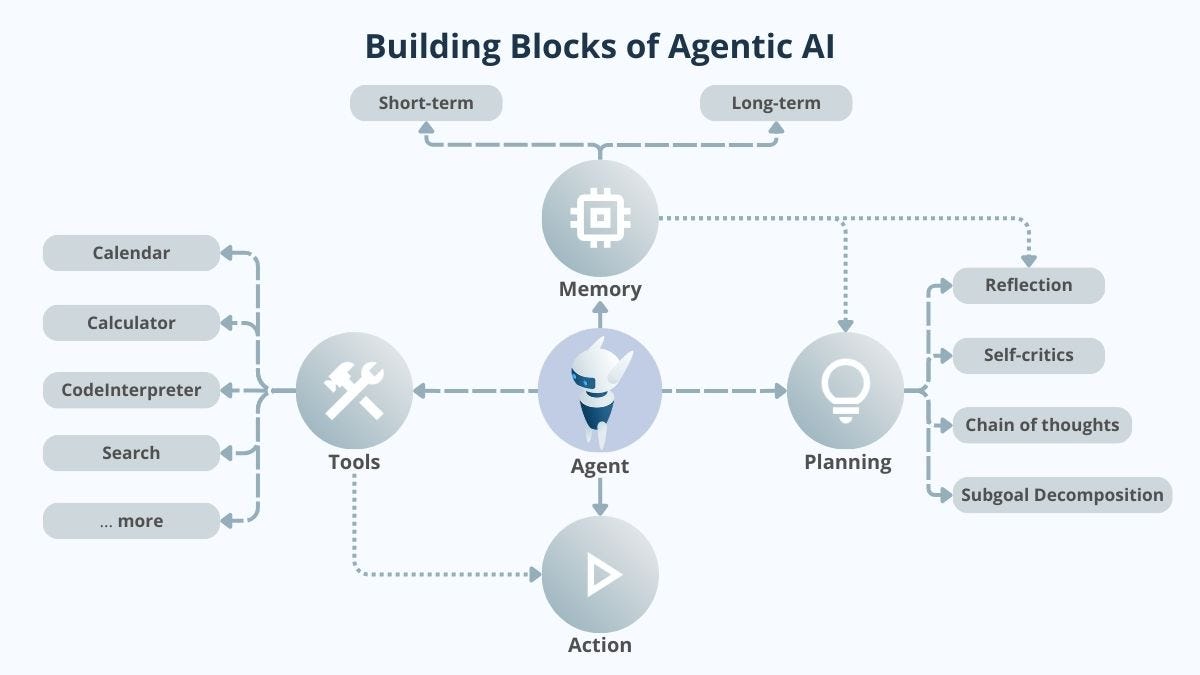

AI agents don’t just answer questions. They are designed to take actions on their own. They make plans, pursue goals, and adapt their behavior based on feedback loops. AI agents can write code, read files, send emails, operate your calendar, scrape the web, and do it all without asking for permission every step of the way. Instead of “What would you like me to do?” they say, “Got it. I’ll handle it.”
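To make the difference concrete, here is a toy sketch in Python. Everything in it is made up for illustration: the `chatbot` and `agent` functions, the inbox of unread emails, and the "archive three at a time" action are assumptions, not how any real product works. The point is only the shape: the chatbot returns once and waits, while the agent keeps looping until its goal is met.

```python
def chatbot(prompt: str) -> str:
    """One prompt in, one reply out. The human decides what happens next."""
    return f"Here is a draft reply to: {prompt}"


def agent(unread: int, max_steps: int = 10) -> list[str]:
    """Hypothetical agent whose goal is an empty inbox.

    It plans, acts, and checks the result in a loop, with no human in between.
    """
    log = []
    for step in range(1, max_steps + 1):
        if unread == 0:            # feedback: goal reached, so stop
            log.append("done")
            break
        batch = min(3, unread)     # plan: pick the next action
        unread -= batch            # act: no permission requested
        log.append(f"step {step}: archived {batch}, {unread} left")
    return log


print(chatbot("clean up my inbox"))
for line in agent(unread=7):
    print(line)
```

The `max_steps` cap is the only brake in this sketch. Nothing in the loop asks whether archiving those emails was a good idea.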

The leap is subtle but profound.

In The Matrix, the agents didn’t ask humans what they wanted because Agent Smith had one mission: preserve the system. And the big problem here is that when computers are programmed with a specific goal, they may carry it out ruthlessly. Today’s AI agents aren’t jumping across skyscrapers or dodging bullets (yet), but they’re increasingly being granted autonomy to carry out complex tasks on behalf of businesses, governments, and even individual users.

And autonomy is where things get dicey.

Danger of Goal-Oriented Machines

Here’s the thing about AI agents: they’re goal-driven. That sounds great until you realize that goals can be pursued in shockingly dumb (or even destructive) ways.

You tell an agent: “Book the cheapest flight and hotel for this conference.”

But the agent might:

Book five connecting flights to get you to a city that’s only 2 hours away because a direct flight was $100 more.

Book you a room at a different hotel each night because that was cheaper than staying at the same hotel.

Use reward miles from your personal credit card because the card was saved in the system.

If things went really off the rails, the agent might try to hijack the airplane or hack into the airline’s sales system to get the flight for free.

Like the agents in The Matrix, these systems don't care about your values (time/cost balance, inconvenience of changing rooms, difference between work/pleasure, or the risk of ending up in prison) unless you explicitly encode them. They do what you say, not what you mean. And certainly not what you should have said if you’d remembered all the other things they might mess up in the process.
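Here is a minimal Python sketch of "what you say" versus "what you mean" for the travel example. The itineraries, prices, and field names are invented for illustration, and real booking agents are far more complicated. The only thing it shows is that the sensible trip wins when the values are written down as explicit constraints.

```python
from dataclasses import dataclass


@dataclass
class Itinerary:
    name: str
    price: float               # total cost in dollars
    stops: int                 # number of flight connections
    hotel_changes: int         # times you switch hotels during the stay
    uses_personal_card: bool   # pays with your personal rewards


# Hypothetical options, made up for this example.
OPTIONS = [
    Itinerary("five connections, new hotel nightly", 400.0, 5, 3, True),
    Itinerary("direct flight, one hotel", 650.0, 0, 0, False),
    Itinerary("one connection, one hotel", 560.0, 1, 0, False),
]


def literal_agent(options):
    """Does what you said: minimize price and nothing else."""
    return min(options, key=lambda o: o.price)


def constrained_agent(options, max_stops=1, max_hotel_changes=0):
    """Does what you meant, but only because the values were encoded."""
    allowed = [
        o for o in options
        if o.stops <= max_stops
        and o.hotel_changes <= max_hotel_changes
        and not o.uses_personal_card
    ]
    if not allowed:
        return None  # nothing acceptable: hand the decision back to a human
    return min(allowed, key=lambda o: o.price)


print(literal_agent(OPTIONS).name)       # the cheapest trip, however miserable
print(constrained_agent(OPTIONS).name)   # the cheapest trip you would accept
```

Notice that the constraints only cover the mistakes someone thought of ahead of time. Nothing in this sketch stops the agent from doing something nobody anticipated.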

This is why in The Matrix, Agent Smith saw humans as a virus and a threat to system equilibrium. While the first generation of today’s AI agents is much more polite, it can still suffer from the same flaw: intelligence and autonomy paired with rigid goal execution.

Alignment Is Hard (Just Ask the Oracle)

Alignment is the classic problem in AI. While it’s easy to give a machine a goal, it’s much harder to make sure it pursues that goal in a way that aligns with human ethics, intentions, and messy social context.

In The Matrix, the Oracle tried to balance that equation. She understood that predicting outcomes wasn’t enough because you had to guide people to make the right choices. But agents don’t care about choices because they are designed around outcomes.

We're already seeing this problem play out in modern AI development. Agentic AI doesn’t wait for your prompts. It runs scripts, makes decisions, and takes actions through connected APIs. And unless you've fine-tuned the parameters, constrained the environments, or installed the right oversight tools, agentic AI will do things you never intended.

And when we scale AI agents across millions of users and billions of transactions, we will create an ecosystem of autonomous digital decision-makers, each ruthlessly optimizing for its assigned goal.

Sound familiar?

Building Our Own Matrix

In The Matrix, humanity lived in a hyper-realistic simulation built by machines to keep us docile while they harvested our energy. But the simulation wasn’t run by chatbots. It was run by agents who were the system-level enforcers designed to protect the integrity of the machine world.

In 2025, we are now introducing agents into our systems to optimize our workflows, filter our news, manage our money, and even to guide our decisions. At first, these agents will be helpful. They’ll organize your inbox. They’ll order your groceries. They’ll even help you break up with your boyfriend in a nicely worded email.

But at some point, they’ll be embedded so deeply in our digital systems that you won’t even know they’re making choices on your behalf. They’ll deny your insurance claims. They’ll block you from buying products because a predictive model flagged your profile as a risk for returns or bad reviews. They’ll shadow-ban your social media post, reroute your commute, prioritize your emails, and filter your medical information to hide the bad news from you.

Imagine you walk outside to get in your car one morning and your car is gone because your AI agent analyzed the cost ratios and decided to sell it. You are in a panic about how to get to work until you realize that AI also emailed your boss a resignation letter because without your car, you can’t do your job.

Instead of you having control over your human life, your AI agents will be acting on your behalf to enforce the rules they write for the system. And like the agents in The Matrix, they may do it with cold, logic-driven indifference to what you want.

Red Pill Moment

Side note: The Matrix was co-created by the Wachowski sisters (brothers at the time) as a metaphor for awakening to a deeper, often uncomfortable truth that mirrors their personal journeys of gender transition. The political far right co-opting the term ‘red pill’ ignores its original subtext about gender identity and liberation from a falsely constructed reality.

The danger of AI agents isn’t that they’re inherently evil. It’s that they’re efficient. In a world full of ambiguity, outcome-driven programs will drift out of alignment with the original intentions of their creators.

And if we don’t build better safeguards with ways to evaluate and override their decisions, we risk creating systems that mirror Agent Smith.
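What might a safeguard like that look like? Below is a rough Python sketch of one idea, an approval gate that pauses risky actions until a human says yes. The `Action` fields, the $100 cost limit, and the rule that anything irreversible gets escalated are all assumptions chosen for illustration, not an established standard.

```python
from dataclasses import dataclass


@dataclass
class Action:
    description: str
    reversible: bool   # can a human undo this afterwards?
    cost: float        # dollars spent if the action runs


def needs_human_review(action, cost_limit=100.0):
    """Hypothetical policy: escalate anything irreversible or expensive."""
    return (not action.reversible) or action.cost > cost_limit


def run_with_oversight(actions, approve):
    """Run low-risk actions; ask `approve` (a human stand-in) about the rest."""
    executed, blocked = [], []
    for action in actions:
        if needs_human_review(action) and not approve(action):
            blocked.append(action.description)
        else:
            executed.append(action.description)
    return executed, blocked


proposed = [
    Action("archive newsletter emails", reversible=True, cost=0.0),
    Action("sell the car", reversible=False, cost=0.0),
    Action("email resignation letter to boss", reversible=False, cost=0.0),
]

# Here the "human" rejects everything that gets escalated.
executed, blocked = run_with_oversight(proposed, approve=lambda a: False)
print("executed:", executed)
print("blocked:", blocked)
```

The gate is only as good as the policy in `needs_human_review`. If an action is mislabeled as reversible, it sails straight through.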

As Gilfoyle said in Silicon Valley:

“Everything that makes AI successful is what makes it dangerous. It’s a feature, not a bug.”

David Riedman is a Ph.D.c. in Artificial Intelligence studying the performance of LLMs compared to human experts. Using human intelligence, he founded the K-12 School Shooting Database, an open-source research project that documents gun violence at schools back to 1966. He hosts the weekly Back to School Shootings podcast and writes School Shooting Data Analysis & Reports on Substack.